Social Interactions for Autonomous Driving: A Review and Perspectives

Wenshuo Wang, Letian Wang, Chengyuan Zhang, Changliu Liu, and Lijun Sun

[Abstract]

No human drives a car in a vacuum; drivers must negotiate with other road users to achieve their goals in social traffic scenes. A rational human driver can interact with other road users in a socially compatible way through implicit communication to complete driving tasks smoothly in interaction-intensive, safety-critical environments. This paper reviews existing approaches and theories to help understand and rethink the interactions among human drivers toward social autonomous driving. The survey seeks answers to a series of fundamental questions: 1) What is social interaction in road traffic scenes? 2) How can social interaction be measured and evaluated? 3) How can the process of social interaction be modeled and revealed? 4) How do human drivers reach an implicit agreement and negotiate smoothly in social interaction? This paper reviews various approaches to modeling and learning the social interactions between human drivers, ranging from optimization theory, deep learning, and graphical models to social force theory and behavioral & cognitive science. We also highlight some new directions, critical challenges, and open questions for future research.

[Bibtex]

@article{wang2022social,

title={Social interactions for autonomous driving: A review and perspectives},

author={Wang, Wenshuo and Wang, Letian and Zhang, Chengyuan and Liu, Changliu and Sun, Lijun},

journal={Foundations and Trends{\textregistered} in Robotics},

volume={10},

number={3-4},

pages={198--376},

year={2022},

publisher={Now Publishers, Inc.}

}

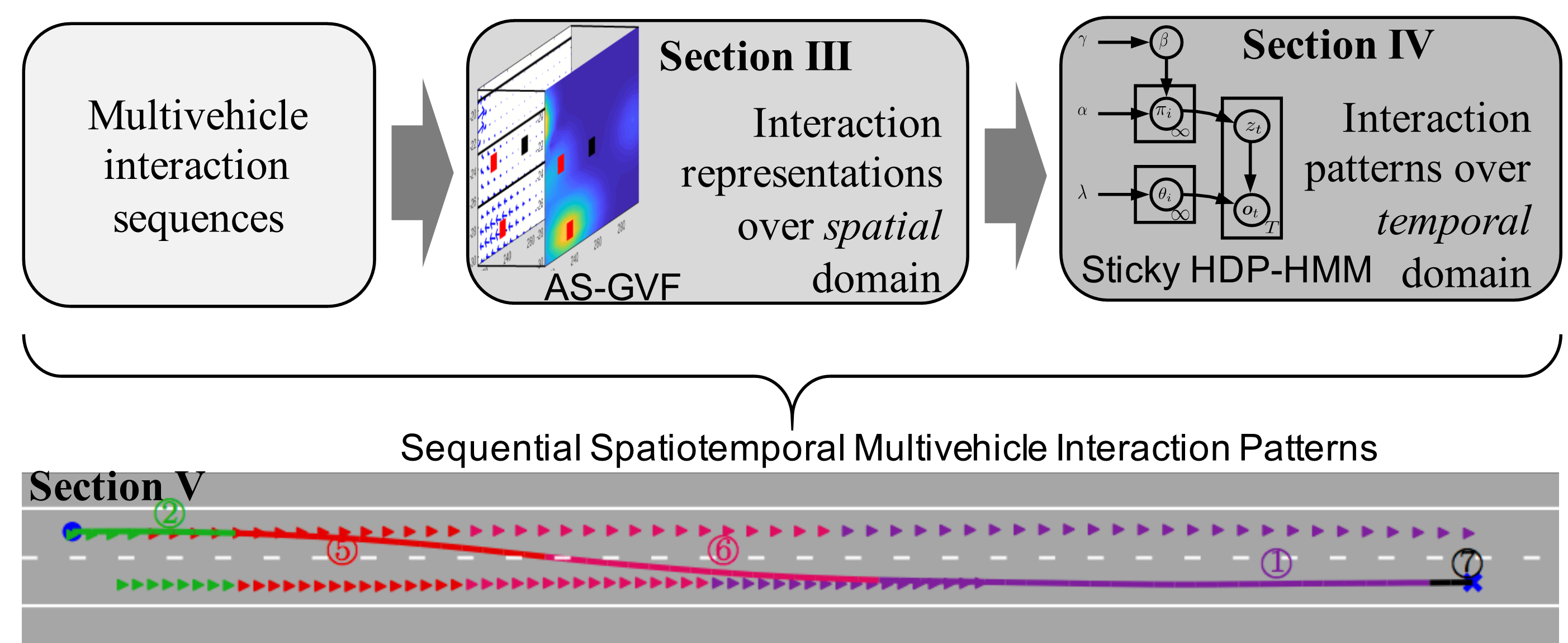

Spatiotemporal Learning of Multivehicle Interaction Patterns in Lane-Change Scenarios

Chengyuan Zhang, Jiacheng Zhu, Wenshuo Wang, and Junqiang Xi

[Abstract]

Interpretation of common-yet-challenging interaction scenarios can benefit well-founded decisions for autonomous vehicles. Previous research achieved this using prior knowledge of specific scenarios with predefined models, which limits adaptive capability. This paper describes a Bayesian nonparametric approach that leverages continuous (i.e., Gaussian processes) and discrete (i.e., Dirichlet processes) stochastic processes to reveal underlying interaction patterns of the ego vehicle with other nearby vehicles. Our model relaxes dependency on the number of surrounding vehicles by developing an acceleration-sensitive velocity field based on Gaussian processes. The experiment results demonstrate that the velocity field can represent the _spatial_ interactions between the ego vehicle and its surroundings. Then, a discrete Bayesian nonparametric model, integrating Dirichlet processes and hidden Markov models, is developed to learn the interaction patterns over the _temporal_ space by automatically segmenting and clustering the sequential interaction data into interpretable granular patterns. We then evaluate our approach in highway lane-change scenarios using the highD dataset collected from real-world settings. Results demonstrate that our proposed Bayesian nonparametric approach provides insight into the complicated lane-change interactions of the ego vehicle with multiple surrounding traffic participants, based on the interpretable interaction patterns and their temporal transition properties. Our proposed approach sheds light on efficiently analyzing other kinds of multi-agent interactions, such as vehicle-pedestrian interactions.

[Bibtex]

@article{zhang2021spatiotemporal,

title={Spatiotemporal learning of multivehicle interaction patterns in lane-change scenarios},

author={Zhang, Chengyuan and Zhu, Jiacheng and Wang, Wenshuo and Xi, Junqiang},

journal={IEEE Transactions on Intelligent Transportation Systems},

year={2021},

publisher={IEEE}

}

- Access our paper via: [arXiv] or [paper].

- Watch the demos via: [YouTube].

- Code for implementing Gaussian Velocity Field: [Github repo].

- Also check the supplements via: [Spatiotemporal_Appendix.pdf].
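The abstract above describes a velocity field built on Gaussian processes that does not depend on how many vehicles surround the ego vehicle. The following is a minimal sketch of that general idea, not the code from the linked repository: it uses a plain squared-exponential kernel instead of the paper's acceleration-sensitive one, and the function names, `length_scale`, `noise`, and the toy positions and velocities are all illustrative assumptions.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=10.0, variance=1.0):
    """Squared-exponential kernel between two sets of 2-D positions."""
    sq_dist = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return variance * np.exp(-0.5 * sq_dist / length_scale ** 2)

def gp_velocity_field(positions, velocities, query, noise=1e-2):
    """Posterior mean of a GP velocity field at the query positions.

    positions:  (n, 2) vehicle positions in metres (n may vary per frame)
    velocities: (n, 2) vehicle velocities in m/s
    query:      (m, 2) locations where the field is evaluated
    """
    mean = velocities.mean(axis=0)  # constant prior mean (an assumption)
    K = rbf_kernel(positions, positions) + noise * np.eye(len(positions))
    K_star = rbf_kernel(query, positions)
    weights = np.linalg.solve(K, velocities - mean)
    return mean + K_star @ weights

# Toy frame with three vehicles (made-up numbers, not highD data).
positions = np.array([[0.0, 0.0], [30.0, 3.5], [60.0, 0.0]])
velocities = np.array([[30.0, 0.0], [25.0, 0.5], [28.0, 0.0]])

# Fixed evaluation grid: the output size depends on the grid only.
xs, ys = np.meshgrid(np.linspace(0, 60, 7), np.linspace(0, 7, 3))
grid = np.column_stack([xs.ravel(), ys.ravel()])
field = gp_velocity_field(positions, velocities, grid)
```

Because the field is always evaluated on the same grid, a frame with two vehicles and a frame with ten produce arrays of the same shape, which is what lets a downstream temporal model consume frames with varying traffic density.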

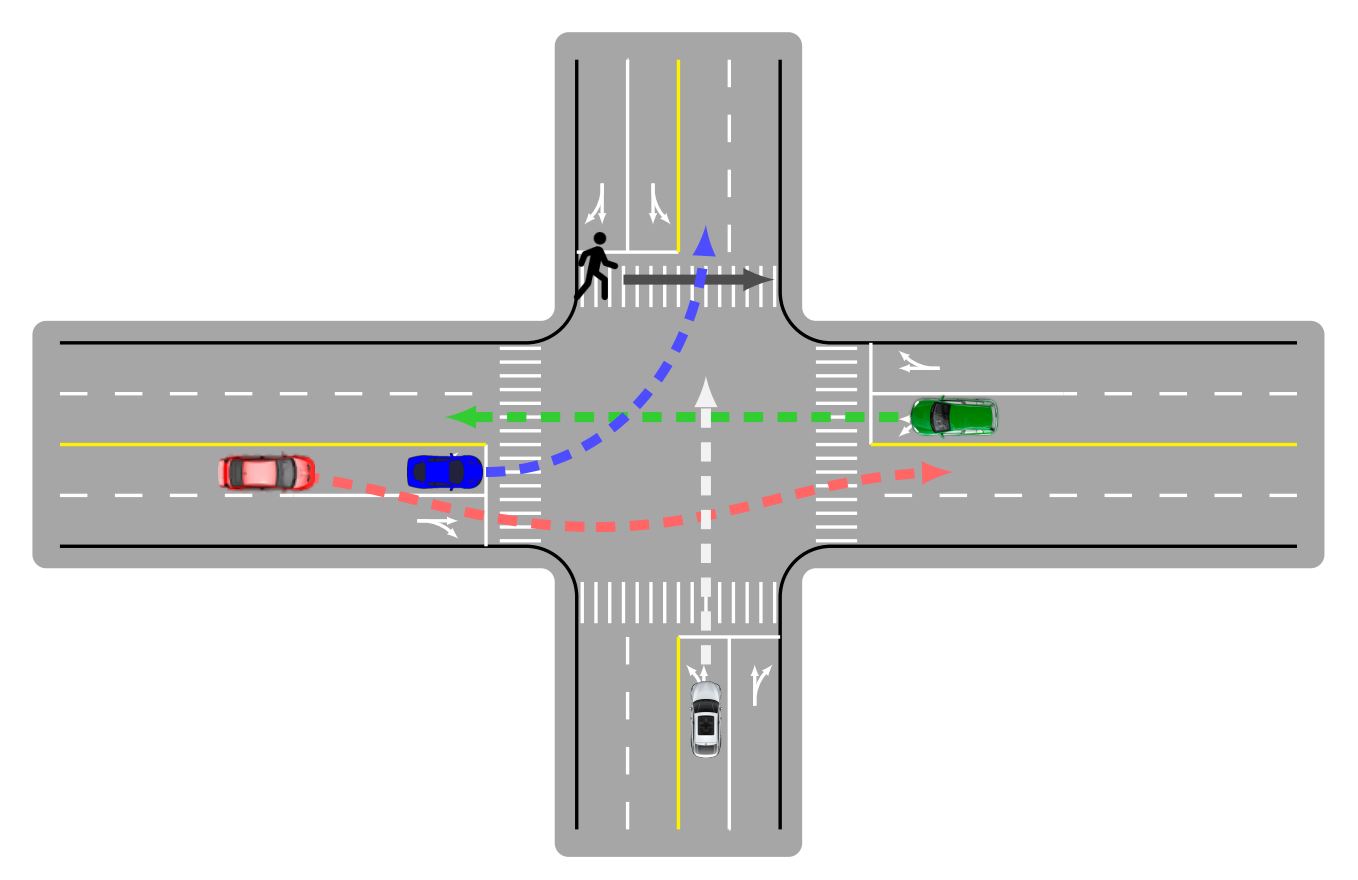

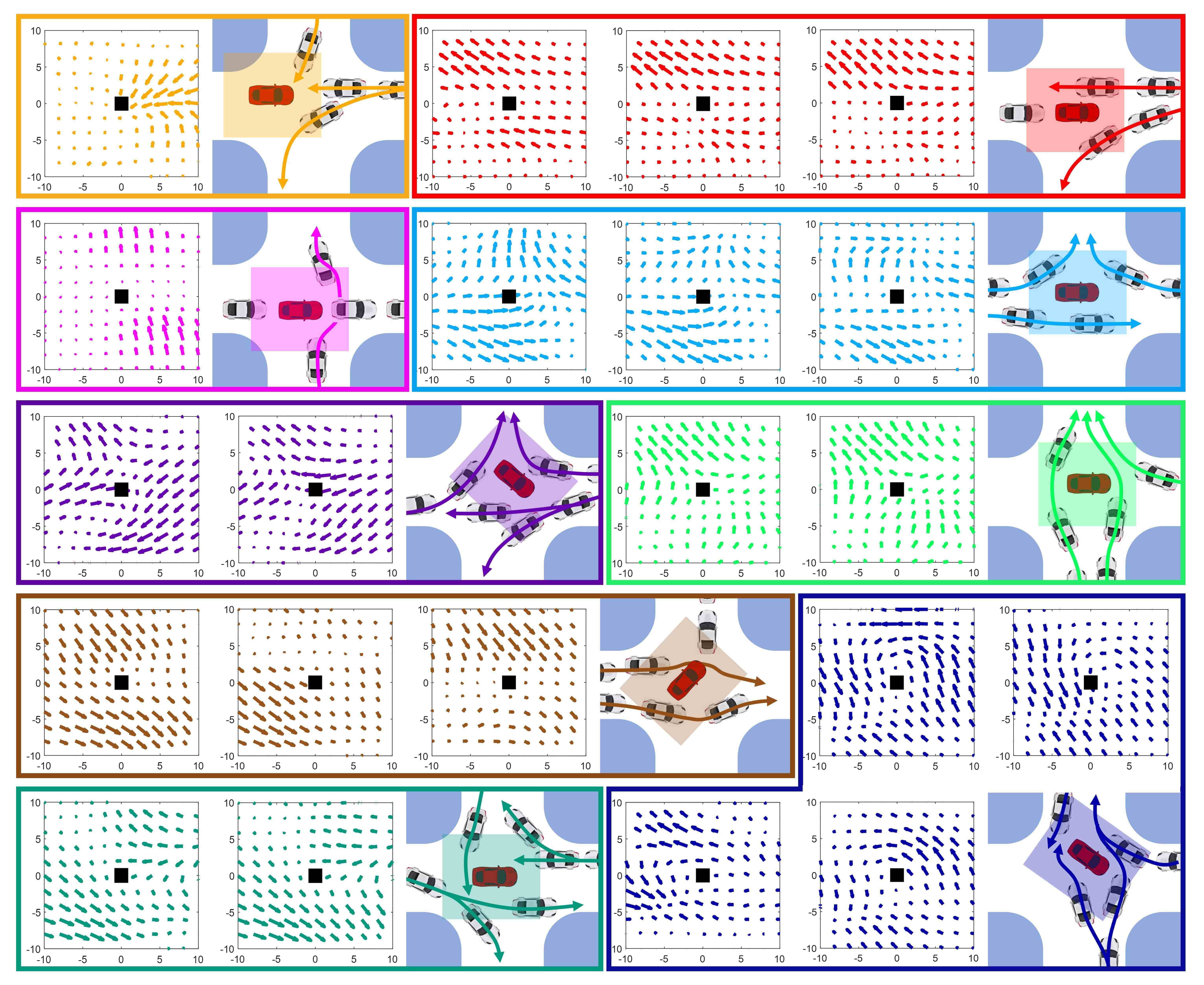

A General Framework of Learning Multi-Vehicle Interaction Patterns from Videos

Chengyuan Zhang, Jiacheng Zhu, Wenshuo Wang, and Ding Zhao

[Abstract]

Semantic learning and understanding of multi-vehicle interaction patterns in a cluttered driving environment are essential but challenging for autonomous vehicles to make proper decisions. This paper presents a general framework to gain insights into intricate multi-vehicle interaction patterns from bird's-eye view traffic videos. We adopt a Gaussian velocity field to describe the time-varying multi-vehicle interaction behaviors and then use deep autoencoders to learn associated latent representations for each temporal frame. Then, we utilize a hidden semi-Markov model with a hierarchical Dirichlet process as a prior to segment these sequential representations into granular components, also called traffic primitives, corresponding to interaction patterns. Experimental results demonstrate that our proposed framework can extract traffic primitives from videos, thus providing a semantic way to analyze multi-vehicle interaction patterns, even for cluttered driving scenarios that are far messier than human beings can cope with.

[Bibtex]

@inproceedings{zhang2019general,

title={A General Framework of Learning Multi-Vehicle Interaction Patterns from Video},

author={Zhang, Chengyuan and Zhu, Jiacheng and Wang, Wenshuo and Zhao, Ding},

booktitle={2019 IEEE Intelligent Transportation Systems Conference (ITSC)},

pages={4323--4328},

year={2019},

organization={IEEE}

}

- Access our paper via: [IEEE ITSC19] or [arXiv].

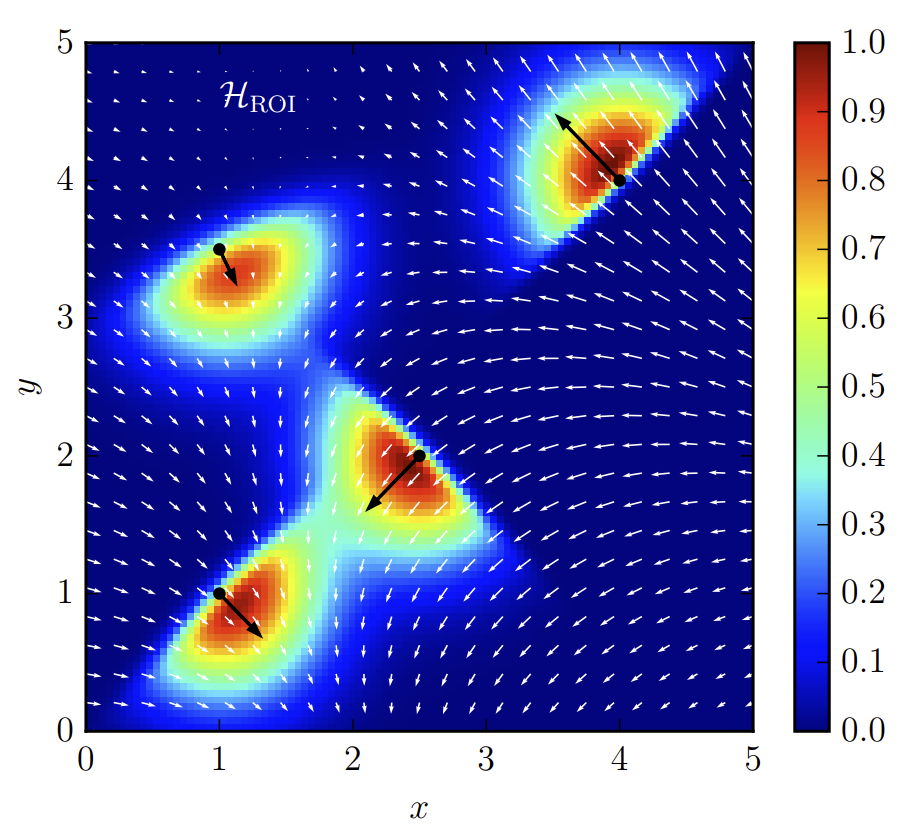

Learning Representations for Multi-Vehicle Spatiotemporal Interactions with Semi-Stochastic Potential Fields

Wenshuo Wang, Chengyuan Zhang, Pin Wang, and Ching-Yao Chan

[Abstract]

Reliable representation of multi-vehicle interactions in urban traffic is pivotal but challenging for autonomous vehicles due to the volatility of the traffic environment, such as roundabouts and intersections. This paper describes a semi-stochastic potential field approach to represent multi-vehicle interactions by integrating a deterministic field approach with a stochastic one. First, we conduct a comprehensive evaluation of potential fields for representing multi-agent interactions from the deterministic and stochastic perspectives. For the former, the estimate at each location in the region of interest (ROI) is deterministic, and the field is usually built directly from a family of parameterized exponential functions. For the latter, the estimate is stochastic and specified by a random variable, and the field is usually built on stochastic processes such as the Gaussian process. Our proposed semi-stochastic potential field, combining the best of both, is validated on the INTERACTION dataset collected in complicated real-world urban settings, including intersections and roundabouts. Results demonstrate that our approach can capture more valuable information than either the deterministic or the stochastic one alone. This work sheds light on the development of algorithms for decision-making, path/motion planning, and navigation for autonomous vehicles in cluttered urban settings.

[Bibtex]

@inproceedings{wang2020learning,

title={Learning Representations for Multi-Vehicle Spatiotemporal Interactions with Semi-Stochastic Potential Fields},

author={Wang, Wenshuo and Zhang, Chengyuan and Wang, Pin and Chan, Ching-Yao},

booktitle={2020 IEEE Intelligent Vehicles Symposium (IV)},

pages={1935--1940},

year={2020},

organization={IEEE}

}

- Access our paper via: [paper].
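To make the deterministic half of the abstract concrete, here is a minimal sketch of a potential field built from parameterized exponential functions, one per vehicle, evaluated over a region of interest. It illustrates the general technique only: the anisotropic Gaussian form, the parameters `amplitude`, `sigma_x`, and `sigma_y`, and the toy vehicle positions are assumptions, not the parameterization used in the paper. The stochastic and semi-stochastic variants are not shown.

```python
import numpy as np

def exponential_potential(query, vehicles, amplitude=1.0, sigma_x=8.0, sigma_y=2.0):
    """Deterministic potential at each query point.

    query:    (m, 2) locations inside the region of interest
    vehicles: (n, 2) vehicle positions
    Each vehicle contributes one exponential bump; contributions are summed.
    """
    dx = query[:, None, 0] - vehicles[None, :, 0]
    dy = query[:, None, 1] - vehicles[None, :, 1]
    per_vehicle = amplitude * np.exp(-0.5 * ((dx / sigma_x) ** 2 + (dy / sigma_y) ** 2))
    return per_vehicle.sum(axis=1)

# Toy scene with two vehicles (made-up coordinates, not INTERACTION data).
vehicles = np.array([[10.0, 0.0], [40.0, 3.5]])
roi = np.array([[10.0, 0.0], [25.0, 0.0], [100.0, 0.0]])
potential = exponential_potential(roi, vehicles)
```

The same inputs always give the same field, which is the sense in which this estimate is deterministic; a stochastic field would instead return a distribution (for example a mean and a variance) at each location.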

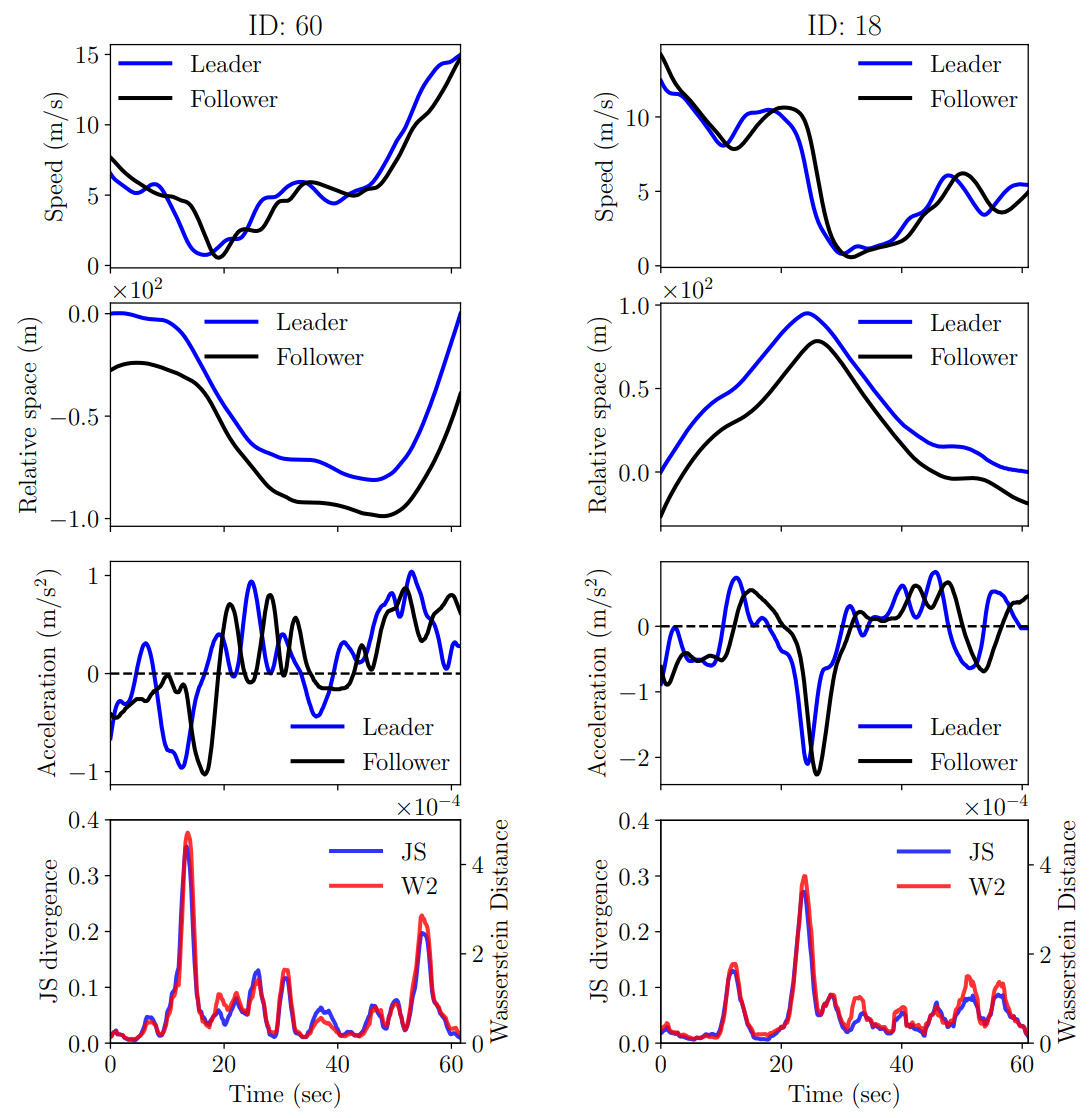

Interactive Car-Following: Matters but NOT Always

Chengyuan Zhang, Rui Chen, Jiacheng Zhu, Wenshuo Wang, Changliu Liu, and Lijun Sun

[Abstract]

Following a leading vehicle is a daily but challenging task because it requires adapting to various traffic conditions and the leading vehicle's behaviors. However, the question "Does the following vehicle always actively react to the leading vehicle?" remains open. To seek the answer, we propose a novel metric to quantify the interaction intensity within car-following pairs. The quantified interaction intensity enables us to recognize interactive and non-interactive car-following scenarios and derive corresponding policies for each scenario. Then, we develop an interaction-aware switching control framework with interactive and non-interactive policies, achieving human-level car-following performance. Extensive simulations demonstrate that our interaction-aware switching control framework achieves improved control performance and data efficiency compared to unified control strategies. Moreover, the experimental results reveal that human drivers do not always keep reacting to their leading vehicle but occasionally take safety-critical or intentional actions: interaction matters, but not always.

[Bibtex]

@article{zhang2023interactive,

title={Interactive Car-Following: Matters but NOT Always},

author={Zhang, Chengyuan and Chen, Rui and Zhu, Jiacheng and Wang, Wenshuo and Liu, Changliu and Sun, Lijun},

journal={arXiv preprint arXiv:2307.16127},

year={2023}

}

- Access our paper via: [arXiv].
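The switching idea in the abstract can be sketched as a controller that picks between two policies according to an interaction-intensity value. This is a hypothetical illustration only: the paper's intensity metric is not reproduced here (the value is simply passed in), and the two linear policies, their gains, `desired_gap`, `desired_speed`, and `threshold` are made-up placeholders rather than the learned policies from the paper.

```python
from dataclasses import dataclass

@dataclass
class FollowerState:
    gap: float           # distance to the leading vehicle [m]
    speed: float         # following vehicle speed [m/s]
    leader_speed: float  # leading vehicle speed [m/s]

def interactive_policy(s, desired_gap=20.0, k_gap=0.1, k_speed=0.5):
    """Hypothetical policy that reacts to the leader's gap and relative speed."""
    return k_gap * (s.gap - desired_gap) + k_speed * (s.leader_speed - s.speed)

def non_interactive_policy(s, desired_speed=30.0, k_speed=0.3):
    """Hypothetical policy that ignores the leader and tracks a desired speed."""
    return k_speed * (desired_speed - s.speed)

def switching_control(s, intensity, threshold=0.5):
    """Return an acceleration command [m/s^2] from the policy selected by intensity.

    `intensity` stands in for the paper's interaction-intensity metric,
    which is assumed to be computed elsewhere.
    """
    if intensity >= threshold:
        return interactive_policy(s)
    return non_interactive_policy(s)

state = FollowerState(gap=10.0, speed=30.0, leader_speed=25.0)
accel_interactive = switching_control(state, intensity=0.9)
accel_free = switching_control(state, intensity=0.1)
```

Keeping the two policies as separate functions mirrors the abstract's point that each scenario gets its own policy, so either one can be replaced without touching the switching rule.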

If you have any questions, please feel free to contact Chengyuan Zhang (enzozcy@gmail.com).